Day 10 - Building blocks of cortical areas and computation - Tony Zador, Xiao Jing Wang, Nuno da Costa

From our Sunday boat trip around Capocaccia. Photo credit: Steve Kalik

After yesterday's tour of brain development, we turn back to the most classical approach: looking at brains after they have formed. What features does this final form have and how do they contribute to computation and intelligence?

The first speaker is Nuno Maçarico da Costa from the Allen Institute, who already talked to us during a discussion group a couple of days ago about their work on collecting and analysing large amounts of anatomical and functional data from the mouse visual cortex. These data do not show transcriptomes, just connectomes.

The sketch above shows a slice of cortex with the thalamic input coming into layer 4 and a typical layer 5 "output" neuron.

This time, he will describe insights he has drawn from these data about how different cell types connect to each other.

He started by discussing connectivity patterns of inhibitory cells (he indicates them with circles while other cells are pyramids). Inhibitory neurons showed highly specific connectivity that was not so prevalent for other types of cells.

Specificity is the observation that a cell type preferentially targets or avoids another cell type. But there are different mechanisms that achieve it.

What is a cell type? This is a complicated question that people will answer differently depending on context. Today when we refer to cell types we mean that cells differ in terms of morphological characteristics, but we do not care about the inputs they receive or the outputs they send. One kind of type distinction would be excitatory versus inhibitory, then more specific types based on morphology.

Tony Zador added that, to him, cell types are just a convenient data reduction technique that we use to explain patterns in the connectome.

Nuno drew a slice of the cortex, divided into horizontal layers, and said that we are most interested in cells living in layer 5 that form some local connections but also project their axons to other layers. He explained that they use electron microscopy (EM), where you have a millimeter-scale view of the primary visual cortex and other areas. In the box that he drew there are about 30000 synapses. There are also glial cells, which give some of the most profound observations.

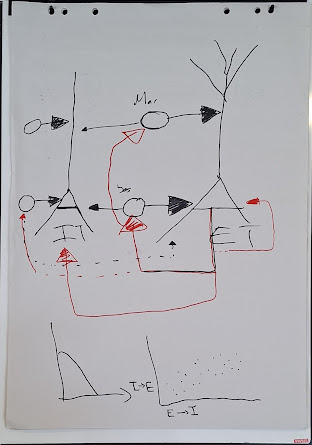

He, then, drew an illustration to explain why inferring the connectivity of these cells is difficult:

Two populations of cells that are hard to tell apart

There are two populations of cells that have the same type and connect to the same target location but start at different locations. To tell them apart you need to know both their type and target location. If you just look at their target location they look identical because you are ignoring the type.

He also drew a circuit with two layer 5 cells and basket cells forming inhibitory connections (a larger triangle means stronger inhibition). One basket cell controls the input of the neuron and another controls the output. There is also a recurrent connection from the output of the neuron to the inhibitory basket cell that turns the neuron off. This is a weak recurrence that probably helps ensure that neurons near the output of the cortex are not firing too much.

The key points are

- EI pairs that are wired together have correlated synaptic strengths: if an excitatory cell strongly excites an inhibitory cell that inhibits it in return, then the inhibitory synapse is also strong.

- This specificity applies to I neurons that target specific locations, e.g. soma or dendritic tree.

- there was one more point that we missed

Basket cell inhibiting neuron on the right

He said that these basket cells are kind of surprising: we are used to short-range excitation and long-range inhibition but these work in the reverse way.

[todo: I think I missed some info on this last circuit and I dont remember what the lineplot is about]

Nuno concluded by saying that connectomes are very useful because you can analyze them to find motifs in neural circuitry. You can read more about this work in one of his papers.

Then it was the turn of another scientist from the Allen Institute, Casey Schneider-Mizell, to talk about the same project.

He began by talking about different types of inhibition in the brain. You can inhibit the output spikes at the axon, or you can inhibit the soma. In insects, you can also deactivate some synapses but not the post-synaptic cell, which is a way to temporarily switch off some connections. Sometimes you can also get bidirectional synapses.

[todo: I do not remember which graph he drew]

The next speaker was Tony Zador, who also talked to us yesterday about the motivation of his work: understanding how we can get connectomes with interesting features. In the human brain there are about 10^9 neurons, so the number of possible connections is 10^18. This is however a worst-case scenario: in reality most of the connections do not exist. Only about 10^(-8) of the connectome entries are non-zero. Thus, the connectome is sparse and the question becomes: what makes the expressed connections interesting?

Then Guido de Croon mentioned that, in addition to connectivity, cell morphology can also play a role in computation. Tony's model does not study this aspect.

Tony emphasized that synapses respond stochastically to input. This means that, once you have the connectome, you should not just consider that spikes always traverse connections but model this stochasticity somehow. The synaptic release probability can go down to 5%.
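To make the point about stochastic release concrete, here is a minimal sketch that treats each presynaptic spike as an independent coin flip. The Bernoulli model, the spike count and the function name `transmitted_spikes` are our own illustrative assumptions, not something Tony presented; only the 5% release probability comes from the talk.

```python
import random

def transmitted_spikes(n_spikes, release_prob, rng):
    """Count how many of n_spikes presynaptic spikes trigger release,
    assuming each spike is an independent Bernoulli trial."""
    return sum(1 for _ in range(n_spikes) if rng.random() < release_prob)

rng = random.Random(0)       # fixed seed so the run is repeatable
n_spikes = 10_000            # hypothetical number of presynaptic spikes
release_prob = 0.05          # the 5% figure mentioned in the talk

n_released = transmitted_spikes(n_spikes, release_prob, rng)
fraction = n_released / n_spikes
print(f"{n_released} of {n_spikes} spikes transmitted ({fraction:.3f})")
```

With a release probability this low, treating every edge of the connectome as a reliable wire would overestimate the transmitted activity by roughly a factor of twenty.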

Tony also said that he does not believe that cell types exist. He just finds them to be a convenient way to come up with a connectome using the approach that Wolfgang Maass also uses: you use cell types to define a matrix of connection probabilities and then randomly sample neural circuits from this ensemble.
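The sampling idea can be sketched in a few lines. Everything concrete here is a made-up assumption for illustration: the two types "E" and "I", the probabilities in `P_CONNECT`, the 80/20 split and the helper name `sample_circuit`. The only thing taken from the talk is the recipe itself: a matrix of type-to-type connection probabilities from which circuits are drawn at random.

```python
import random

# Hypothetical type-to-type connection probabilities (illustrative only).
P_CONNECT = {
    ("E", "E"): 0.1,
    ("E", "I"): 0.3,
    ("I", "E"): 0.4,
    ("I", "I"): 0.2,
}

def sample_circuit(types, p_connect, rng):
    """Return a 0/1 adjacency matrix; adj[i][j] = 1 means i projects to j."""
    n = len(types)
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue  # no self-connections in this sketch
            p = p_connect[(types[i], types[j])]
            if rng.random() < p:
                adj[i][j] = 1
    return adj

rng = random.Random(1)
types = ["E"] * 80 + ["I"] * 20   # hypothetical 80/20 split
adj = sample_circuit(types, P_CONNECT, rng)
n_edges = sum(map(sum, adj))
print("sampled connections:", n_edges)
```

Each call with a different seed gives a different circuit from the same ensemble, which is what makes cell types a compact description: four numbers stand in for thousands of individual entries.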

He then made a drawing that, in its messiness, exemplified what connectome data with dense connectivity in a small area look like:

He also said that deriving connectomes, using similar approaches to the ones used by the Allen Institute, requires expensive equipment and that his lab has been exploring alternative approaches that reduce the cost at the expense of reduced accuracy.

He, then, explained some of these techniques.

Brainbow is a technique where different colors are used to label neurons, which makes tracing the axons possible. The problem with this approach is that it is difficult to distinguish 200 colors concentrated in a small area.

He then explained the two techniques based on DNA sequencing that his lab introduced. DNA-sequencing technology is improving at a rate even better than Moore's law, which made it a favorable candidate.

The first approach of his lab was basically replacing Brainbow's colors with DNA sequences. They put a random DNA sequence into a virus (the Sindbis virus), inject the virus into a cell or small group of cells in a particular location, and wait for about two days for the virus to spread in the whole brain. They used 30 nucleotides so they could make 4^30 possible sequences. This is a very large number: if you randomly splash them on a brain then there is a very small probability that two neurons will get the same sequence. Unlike reading colors, reading these DNA sequences can be very easy and accurate with current technology.
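How small is "very small"? A back-of-the-envelope sketch, assuming barcodes are assigned uniformly and independently (real barcode libraries are not perfectly uniform, so treat this as a lower bound on the collision risk). The neuron count and the function name `prob_any_collision` are illustrative choices of ours; only the barcode length of 30 comes from the talk.

```python
import math

BARCODE_LENGTH = 30                      # nucleotides, from the talk
n_barcodes = 4 ** BARCODE_LENGTH         # A, C, G or T at each position

def prob_any_collision(n_neurons, n_codes):
    """Birthday-problem approximation: 1 - exp(-n(n-1) / (2N))."""
    expected_pairs = n_neurons * (n_neurons - 1) / (2 * n_codes)
    return -math.expm1(-expected_pairs)  # equals 1 - exp(-expected_pairs)

n_neurons = 200_000                      # illustrative number of labelled neurons
p = prob_any_collision(n_neurons, n_barcodes)
print(f"{n_barcodes:.3e} barcodes, collision probability {p:.2e}")
```

Using `math.expm1` instead of `1 - math.exp(...)` keeps the result accurate when the probability is tiny, which is exactly the regime here.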

In their first version, they cut the brain into cublets, put them into tubes, extract the RNA and send it for sequencing. The machine then tells you what it saw in each region.

This technique allows tracing long-range projection patterns (the projectome) far more easily and cheaply than would be possible by tracing individual axons over the whole brain. But it has the weakness that it gives the projectome, not the connectome. They have now been working for the last 6 years to correlate this genetic tracing with physiology, which has been difficult because of alignment and other technical problems.

Coming back from the coffee break, Tobi, Rodney Douglas and Andre van Schaik started off with an announcement about the Mahowald Early Career Award, inviting recent PhD graduates working on neuromorphic computing to apply. Submitters should carefully read the instructions and submit their work for consideration. The prize is meant to recognize any good work that can justify itself as being "neuromorphic" in some way. Previous submissions failed to show how their work was inspired by brain computing principles or failed to point out the submitter's individual contributions to a larger project.

After this, Tony continued with a description of the second version of their method, as the audience was very eager to learn more about the technology. The next generation is called BARseq. He said that the second version is a bit more involved technically but the bottom line is that it allows you to read not only the added sequences (which he calls barcodes) but also the genes, their spatial location and morphology. What they cannot do with this technique is infer synaptic connections: you can see whether an axon is near a cell but the accuracy is not high enough to enable detecting a synapse.

Some numbers about Tony's technique: they barcoded 200000 neurons. They cut the brain into 300 cublets. Each cublet contained 500-1000 neurons. For each cublet you get the projection pattern, which he calls the projectome (it is not a connectome because we cannot detect synapses).

There was also something that surprised him in his analysis: each cublet projected to about 80% of the other cublets. Although we cannot detect synapses, an axon projecting to an area most likely means that it also forms connections there, as this is how axons work. How do neurons decide where to project to? Initially he thought that there must be some higher-order effects in how they form multiple connections, but the data analysis revealed that you could just ignore interactions between cublets. If you start from cublet 1, then the probability of projecting from 1 to 14 is one number and from 1 to 22 is another number. The probability of projecting to both is then just their product, which means that they are independent events. This was disappointing from a biological perspective but fascinating theoretically.
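The independence claim is easy to state as a check on data: estimate the two marginal probabilities and the joint probability, and compare the joint with the product. The sketch below runs that check on synthetic data that is independent by construction, so it only shows what the test looks like; the probabilities, the sample size and the helper `projection_stats` are hypothetical and not taken from Tony's analysis.

```python
import random

def projection_stats(projections, a, b):
    """Return (P(a), P(b), P(a and b)) estimated from a list of target sets,
    one set of target cublets per neuron."""
    n = len(projections)
    p_a = sum(a in t for t in projections) / n
    p_b = sum(b in t for t in projections) / n
    p_ab = sum(a in t and b in t for t in projections) / n
    return p_a, p_b, p_ab

# Synthetic neurons in cublet 1: each picks its targets independently.
rng = random.Random(2)
target_prob = {14: 0.3, 22: 0.5}   # hypothetical projection probabilities
projections = [
    {tgt for tgt, p in target_prob.items() if rng.random() < p}
    for _ in range(20_000)
]

p_a, p_b, p_ab = projection_stats(projections, 14, 22)
print(f"P(14)*P(22) = {p_a * p_b:.3f}, P(14 and 22) = {p_ab:.3f}")
```

On real data, a joint probability that differs from the product by more than sampling noise would be the signature of the higher-order structure that Tony initially expected to find.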

We wrapped up with some questions from the audience: Do delays matter? Tony does not care much about them so he does not model them. And how much do axon paths deviate from the shortest path? We do not know exactly but about 10 times, said Nuno.

The next and final speaker for today was Xiao Jing Wang from the Free University of Brussels, who talked about differences between different brain regions, such as V1 (aka the primary visual cortex) and the pre-frontal cortex (PFC). The first is responsible for processing sensory inputs while the other is involved in complex cognitive tasks that require decision-making. There is evidence that neurons in the PFC are not just responding to input but also accumulating it, potentially helping maintain a working memory.

He then drew some line plots of how neurons respond to a stimulus. We can tell a neuron with memory apart from one without by sending a pulse and seeing for how long the neuron fires.

Comparing how neurons with different time constants react to input

In the decision-making circuits in PFC you see that neurons show ramping activity when they receive a pulse. This does not happen in V1, where neurons need to react very quickly and have very short time constants.

Xiao said that the question motivating him is: how can we understand why brains have different areas? He said he works with graph theory and does not consider spatial embeddings.

He said that most works say that connectivity goes down exponentially with distance. But he thinks that this is far from the full picture, as it does not consider the heterogeneity of different areas. He wants to instead build a dynamical model of area to area connectivity.

He, then, drew some plots to explain what we know about how different areas in the brain differ:

The horizontal axis is a hierarchy of cortical areas in the mouse brain. In the first plot we see spine count. We know that each spine forms an excitatory synapse so this metric is a proxy for excitation. We see that the two areas have very different profiles [todo: which line is which area?] The plot beneath is PV density, which is the fraction of neurons that ... [todo: I did not get what is PV]. These neurons control the excitability of the area. The final one is about dopamine receptors, which are involved in reinforcement learning. Input areas such as V1 do not employ reinforcement learning but some form of Hebbian learning, so they do not need dopamine.

He, then, talked about his model of a hierarchy of time constants, where he modified the canonical cortical microcircuit from Douglas and Martin by adding these scaling laws.

We are looking at a mouse in a steady state: we insert a signal and compute its autocorrelation to get the time constant tau. Another way to do it is to insert a pulse and measure the time constant of each area (where we compute the time constant across all neurons and average):
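For readers who have not seen the autocorrelation route before, here is a minimal sketch. It assumes the autocorrelation decays as exp(-lag*dt/tau) and reads tau off a single lag. The synthetic signal is a first-order autoregressive process with a known time constant, which we swapped in as stand-in data; it is not Xiao's model, and the names `autocorr` and `estimate_tau` as well as the units are our own.

```python
import math
import random

def autocorr(x, lag):
    """Sample autocorrelation of sequence x at the given lag."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x)
    cov = sum((x[i] - mean) * (x[i + lag] - mean) for i in range(n - lag))
    return cov / var

def estimate_tau(x, dt, lag=1):
    """Assume autocorrelation = exp(-lag*dt/tau) and solve for tau."""
    r = autocorr(x, lag)
    return -lag * dt / math.log(r)

# Synthetic "activity": an AR(1) process with a known time constant.
rng = random.Random(3)
dt, true_tau = 1.0, 20.0          # hypothetical units (e.g. ms)
a = math.exp(-dt / true_tau)      # per-step decay factor
x = [0.0]
for _ in range(50_000):
    x.append(a * x[-1] + rng.gauss(0.0, 1.0))

tau_hat = estimate_tau(x, dt)
print(f"true tau = {true_tau}, estimated tau = {tau_hat:.1f}")
```

A single-lag estimate like this breaks down if the measured autocorrelation is zero or negative; fitting an exponential over several lags would be the more robust choice on real recordings.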

How does the time constant change with area? Xiao got a curve that looks linear.

A comment from the audience was that, if the animal was not at its resting state, then dopamine would have affected these results.

Why do different regions employ different constants? Xiao's explanation is based on predictive coding. He said that in order to predict you often need a temporal context. So areas involved in decision-making will need slow time constants to integrate information over time. Whereas areas involved in sensing will need quick constants for reflexes, as we already said.

*****

In the afternoon session Roman Bauer from University of Surrey talked to us about his work on computational modelling of brain development.

He described different ways of growing networks that have similar properties to biological ones, such as small-worldness. Examples he used include preferential attachment and, in general, methods that do not consider the spatial locations of neurons.
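As a reminder of what preferential attachment does, here is a minimal sketch of the growth rule: every new node connects to an existing node picked with probability proportional to its degree. Adding one edge per new node is the simplest variant and is our own simplification (it yields a tree with a few hubs and illustrates only the attachment rule, not small-worldness); the function name and network size are likewise illustrative. Note that no spatial position appears anywhere.

```python
import random

def preferential_attachment(n_nodes, rng):
    """Grow a graph one node at a time; each new node attaches to one
    existing node chosen with probability proportional to its degree."""
    edges = [(0, 1)]
    # Each node appears in `endpoints` once per edge it touches, so a
    # uniform draw from this list is a degree-weighted draw over nodes.
    endpoints = [0, 1]
    for new in range(2, n_nodes):
        target = rng.choice(endpoints)
        edges.append((new, target))
        endpoints.extend((new, target))
    return edges

rng = random.Random(4)
edges = preferential_attachment(1000, rng)
degree = {}
for u, v in edges:
    degree[u] = degree.get(u, 0) + 1
    degree[v] = degree.get(v, 0) + 1
print("max degree:", max(degree.values()), "edges:", len(edges))
```

Early nodes accumulate most of the connections, which is the "rich get richer" effect that gives these networks their hubs.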

He also explained some insights from his own models, such as the fact that considering neuron death was necessary to simulate cortical layer formation. He also said that he is currently exploring growth models using spiking neural networks and described the BioDynaMo software framework that he developed in collaboration with CERN in order to get a platform for very efficient simulation of biological processes such as growth.
